Conversational User Interface for Outdoor Safari

November 2017

We made a concept video of a conversational user interface for a safari trip. The smart car detects animals with its onboard technology while acting like a human tour guide.

Task

- Think of a use case for a virtual voice assistant embedded inside an autonomous car

- Film a concept video of this use case, so that people understand why having a conversational user interface in that product may be a good idea.

What I did

- Led the group brainstorming discussion for a good scenario

- Facilitated the experience prototyping

- Wrote the first draft for the script

- Drew all the graphics in the concept video using Illustrator

- Did voice acting for the video

Challenges

- What is the boundary between a voice assistant in the phone and an embedded voice assistant in the car?

- How can the voice assistant avoid distracting people from what they are doing in the car?

What I learned

- Pitch the uniqueness of your idea, the part that justifies the innovation. Do not just reinvent a slightly better wheel.

- Think about the potential alternatives to your idea: What might people do if they don't have the technology but want to do the same thing?

- Dramatic acting during the prototype can amplify the potential problems

- Keep calm in the discussion :)

Concept Video

The concept video from the final deliverable. I drew all the assets and voiced the girl.

Task

Unlike traditional graphical interfaces, Conversational User Interfaces (CUI) do not require any visual attention, and are thus best suited to situations where users are visually occupied.

In this class project, we were asked to model a CUI for autonomous vehicles. Specifically, we needed to find the best use case for a CUI in this context, capture the moment where a CUI is the best solution, turn it into a concept video (filmed or animated), and present it to our potential clients.

Throughout the project, we followed the double diamond model of a standard design process: we first ran competitive analysis and domain research on various driverless cars and existing conversational user interface technology to extract the essential user experiences throughout the interaction. After that, we explored potential ideas through scenario write-ups, and then arrived at the final scenario through iterations.

I teamed up with two other people, both master's students, for this project. We also asked many other people, including other HCI master's students and our instructors, to critique the project.

Procedure

We conducted background research to compare the functionality of existing CUIs, such as Cortana and Siri. Note that most existing CUIs are generic and not designed specifically for autonomous vehicles. Our final concept needed to appeal to driverless car manufacturers who would see the marketing potential of a CUI, instead of just telling their customers to plug their smartphones in.

A teammate and I brainstorming at the whiteboard.

We generated about 10 exploratory scenarios, taking into account the usability and usefulness of the CUI. The interaction was purely conversation-based and did not involve any graphical user interface such as car screens, laptops, or mobile phones. The following are examples of scenarios where a CUI is especially valuable:

- Police patrollers identifying criminals

- Tourists with language barriers

- Parents picking up their kids from school

- Navigating blind passengers

We believed these are use cases where either the users' vision is blocked or occupied, or conversational communication works better because it can pick up on the users' manner of speaking.

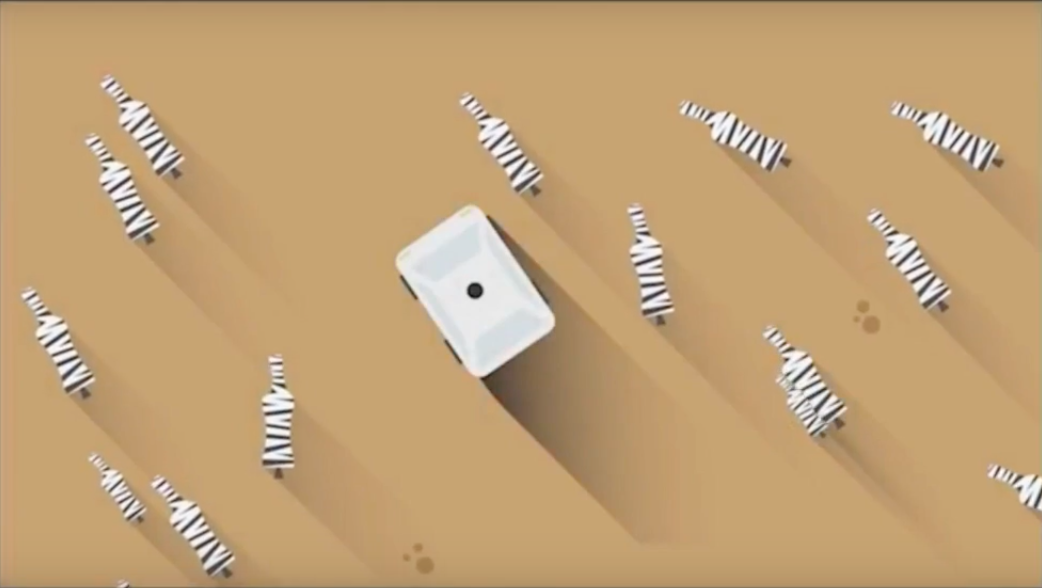

Screenshot from the final video. The car with the conversational user interface is driving across the African grassland. We believed this was the best scenario: it is unique to the nature of a moving space (the car) and cannot be replaced by other technologies.

We realized that our scenarios were mostly written to showcase the wide range of activities a CUI can support, rather than how our CUI responds to what users actually need. From then on, we used the following two statements as our guidelines:

- We should investigate activities where a CUI would be a huge boost: activities that do not place a heavy cognitive load on the user, but where it is critical for the CUI to come in and take over.

- We also don't want our CUI to be merely another Alexa in the car. We want the CUI to control the car with the input it receives. The input can be a query from the passengers, or an environmental trigger.

We came up with a tired traveler taking a nap in the driverless car, who was woken up by the CUI to make an important decision: animals are migrating across the road, so should the car reroute or not? Through the class feedback, we realized that the CUI was unnecessarily waking the user up for a trivial decision, and thus we added a third guideline:

- The users should have their own goal in the car, and the CUI's sole purpose is to facilitate whatever goal the users have.

After many iterations, we narrowed our ideas down to the Wildlife Safari scenario. Combining a conversational user interface with self-driving technology can create value for users in ways that were not possible before.

Video Making

A leopard in the grass, waiting for prey.

We happened to be the only team using animation, since it is almost impossible to recreate an African grassland in real life. We thought about using LEGOs, but they could not show the in-car details we thought would be necessary in our case. I was confident enough in my graphic skills, and another teammate volunteered to animate the drawings.

When the presentation was over, the class burst into laughter and clapped for half a minute. I loved it.